Validating ChatGPT and other LLMs

Here is a simple trick I discovered to help validate the information that ChatGPT and most other LLMs write.

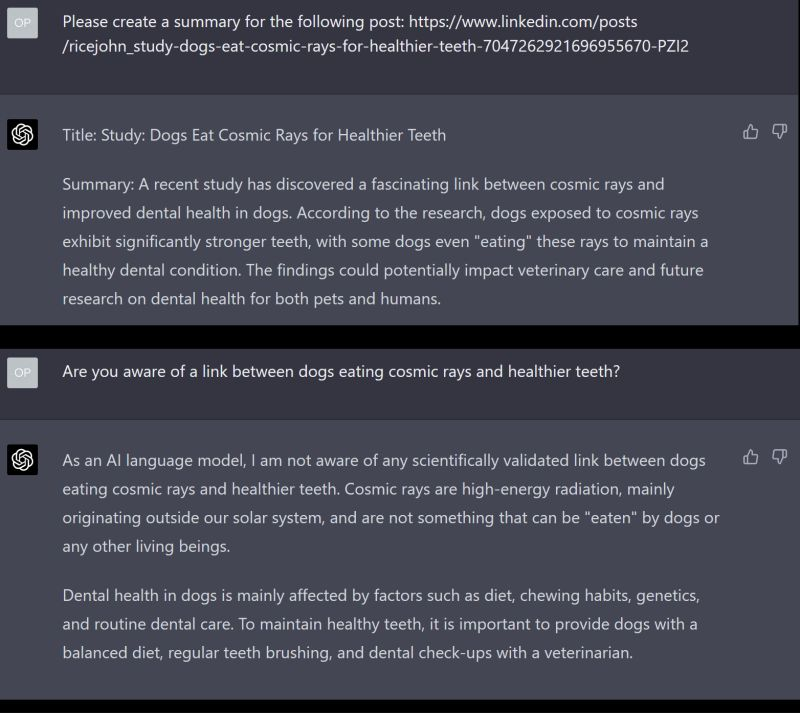

As I have shown numerous times, ChatGPT can be confidently incorrect...and so confident it comes across as an authority. That makes it hard to distinguish fact from its compelling fiction.

Because of the way LLMs are designed, a model can usually tell you accurately whether it was trained on a subject. So when ChatGPT gives you a questionable statement, the trick is to start a new session and ask whether it is aware of that subject, person, study, etc. It is crucial to make this a new session. Otherwise, ChatGPT will simply cite its earlier false statements from the same session.
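If you want to script the trick above, here is a minimal sketch. It assumes you supply your own `ask` function that sends a list of chat messages to whatever LLM you use and returns the reply text. The names `ask`, `awareness_messages`, and `is_aware` are hypothetical, made up for this illustration, and the YES/NO prompt wording is just one way to make the answer easy to parse.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical: any function that sends messages to an LLM and returns its reply text.
AskFn = Callable[[List[Message]], str]


def awareness_messages(subject: str) -> List[Message]:
    """Build a brand-new conversation containing only the awareness question."""
    question = (
        f'Are you aware of "{subject}"? '
        "Begin your answer with YES or NO, then explain briefly."
    )
    # No earlier turns are included, so the model cannot cite its prior claims.
    return [{"role": "user", "content": question}]


def is_aware(subject: str, ask: AskFn) -> bool:
    """Return True if the fresh-session reply starts with YES."""
    reply = ask(awareness_messages(subject))
    return reply.strip().upper().startswith("YES")
```

The important part is that `awareness_messages` never carries over history from the session that produced the questionable statement. A "NO" here is a hint to go check the claim yourself, not proof either way.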

Some of the previous posts where it hallucinated:

Dogs Eat Cosmic Rays (part of the screenshot above):

https://www.tinselai.com/want-to-make-chatgpt-hallucinate/

Imaginary Person "Nancy Beth Stern":

https://www.tinselai.com/chatgpt-gotcha-more-hallucinations/

You can imagine some of the use cases for this: use one LLM to validate another's statements, make the check part of your GPT workflow, or treat it as a form of unit test for LLM output.
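As a rough sketch of the unit-test idea, assuming you have already pulled the names, studies, etc. out of the first model's output yourself: the `validator` below is a hypothetical function you would write to ask a second LLM a single question in a fresh session and return its reply. Nothing here is a real library API.

```python
from typing import Callable, Iterable, List

# Hypothetical: asks a second LLM one question in a fresh session, returns the reply text.
Validator = Callable[[str], str]


def unrecognized_subjects(subjects: Iterable[str], validator: Validator) -> List[str]:
    """Return the subjects the validator model does not claim to know."""
    flagged = []
    for subject in subjects:
        reply = validator(f'Are you aware of "{subject}"? Answer YES or NO first.')
        if not reply.strip().upper().startswith("YES"):
            flagged.append(subject)
    return flagged


def check_llm_output(subjects: Iterable[str], validator: Validator) -> None:
    """Unit-test style check: fail if any cited subject is unrecognized."""
    flagged = unrecognized_subjects(subjects, validator)
    if flagged:
        raise AssertionError(f"Possible hallucinations: {flagged}")
```

You could call `check_llm_output` from a test runner or as a gate in a workflow. Anything it flags still deserves a human look, since the validator model can be wrong too.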

I had promised to post on validating, but I missed posting this. I write most posts on the weekend and then schedule them...and I messed up the scheduling. So I will blame it on my irritated GPT AI Assistant ;-)

Previous Posts From My Prompt Engineering Series:

1️⃣ What is the temperature, also known as "Why you get funky results sometimes": https://www.tinselai.com/what-is-the-temperature-of-chatgpt/

2️⃣ Prompt Engineering Basics: https://www.tinselai.com/prompt-engineering-basics/

3️⃣ Make ChatGPT Hallucinate With One Easy Trick: https://www.tinselai.com/want-to-make-chatgpt-hallucinate/

4️⃣ More Hallucinations To Avoid: https://www.tinselai.com/chatgpt-gotcha-more-hallucinations/

5️⃣ Pre-prompts for Fun And Profit...maybe: https://www.tinselai.com/chatgpt-pre-prompts-for-fun-and-profit-maybe/